Utility in Selection

Introduction

The use of assessment techniques has been studied more extensively in selection than in any other application. That is mainly because selection is the most obvious application for assessments, one where straightforward calculations can be applied. It is also partly because we often cling to the idea of a silver bullet: some magical characteristic that would identify successful candidates. In reality, things are more complicated and require considering both the environment and the individuals. Decades of developing job descriptions and searching for best-fit candidates have often led to misleading conclusions and have shown that focusing on magical characteristics is unrealistic. There are several factors to keep in mind when using assessment techniques, which we review here.

Utility’s Standard Approach

The standard approach to an assessment technique’s utility in selection reflects a common-sense idea: if a characteristic lets you predict a person’s performance, then measuring that characteristic is useful. For example, if a person scores well on a math test, you hire them for a job that requires math skills based on that score, and the person actually performs well in math, then the test taken before hiring served as a useful predictor of their performance.

Using statistics on a large group of people, an assessment technique’s utility is evaluated by analyzing the connection between what the technique measures and a professional criterion, such as turnover, production, or satisfaction, and by estimating the improvement that can be credited to the technique. If decisions based on the measure lead to better results on the criterion than random decisions would, then the characteristic assessed by the technique accounts for its utility in improving performance.

The calculation relies on the ratio $\sqrt{1 - R^2}$, where $R$ is the validity, or correlation coefficient. This ratio, which underlies the standard error of estimate, indicates how much prediction error remains relative to the error of a random decision. With a correlation of 0.5, the ratio is 87%, indicating that using the assessment technique could reduce the error of random decisions by about 13%. A correlation of 0.5 is uncommon or may reflect a randomly selected process. Typical values for assessment techniques are 0.2 or 0.3, which decrease randomness by 2% or 5%. However, these values are generally considered sufficient for an assessment technique to be part of an automated selection process.
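
The percentages quoted above can be checked with a few lines of Python. This is only a sketch of the arithmetic; the function name `error_ratio` is an illustrative choice, not a term from the literature.

```python
import math

def error_ratio(validity: float) -> float:
    """Return sqrt(1 - R^2): the share of prediction error that remains
    when a predictor with the given validity replaces a random decision."""
    return math.sqrt(1.0 - validity ** 2)

# Validities discussed in the text.
for r in (0.2, 0.3, 0.5):
    remaining = error_ratio(r)
    print(f"R = {r:.1f}: error ratio = {remaining:.1%}, reduction = {1 - remaining:.1%}")
```

Rounded to whole percentages, the reductions come out at about 2%, 5%, and 13%, matching the figures in the text.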

Do people with specific traits excel in certain jobs? Do attractive men and women sell better than others? How much of success can be attributed to one leadership trait compared to others? How much does attending a particular college improve performance? Over the years, the standard approach to assessment techniques' utility has helped challenge and disprove the most common ideas. What other variables should we consider to better predict and improve performance?

Common sense, regardless of intelligence, isn't enough to predict and improve individual performance. Many characteristics can be assessed and understood, not only on a personal level but also in their environment, based on how this characteristic is valued. Assessment techniques provide insights that, even if not used in automated mass selection processes, help deepen understanding of people, including those who don’t meet certain criteria. They can also assist individuals and their environments in growing together, allowing requirements to be met more quickly or even exceeded.

Mass Selection

When recruiting a large number of candidates for the same position, even more than in other situations, criteria beyond the prediction error and the correlation coefficient must be considered, such as the proportion of candidates to be screened out and the economic value at stake.

Here, utility is addressed through decision theory. Originally developed by Wald[1] for quality control in the industrial sector, these methods were later adapted by Cronbach and Gleser[2], who considered the a priori acceptance or rejection of candidates and the success or failure that ultimately follows.

Utility Based on Pass Percentage

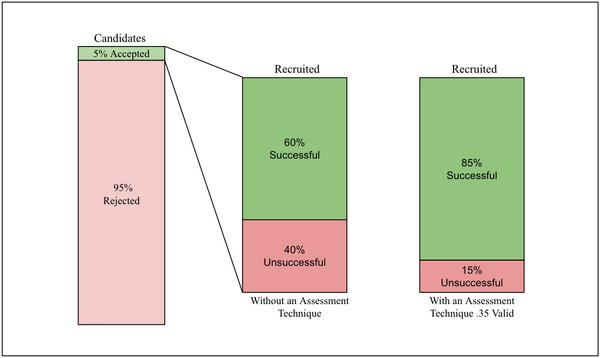

The first utility tables used in selection were established by Taylor and Russell[3] to estimate the net gain in recruitment precision. In addition to the validity coefficient of the assessment technique, this model includes the proportion of applicants who are selected and the proportion who would succeed without the assessment technique.

These tables show, for example, that when 5% of candidates are selected for a job with an assessment technique whose validity is 0.35, in a situation where not using the assessment technique gives 60% success, using the assessment technique improves the success rate by 25 percentage points, from 60% to 85%.
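
Table values of this kind can be approximated numerically. The sketch below assumes that predictor and criterion scores follow a bivariate normal distribution and that candidates are selected top-down on the predictor; the function name and the integration settings are illustrative choices, and the result is an approximation rather than a reproduction of the published tables.

```python
from statistics import NormalDist

STD_NORMAL = NormalDist()

def success_rate_among_selected(validity, selection_ratio, base_rate, steps=4000):
    """Approximate P(success | selected) under a bivariate normal model.

    validity        -- predictor/criterion correlation (must be below 1)
    selection_ratio -- share of applicants selected (top scorers)
    base_rate       -- share of applicants who would succeed without the test
    """
    x_cut = STD_NORMAL.inv_cdf(1.0 - selection_ratio)  # predictor cutoff
    y_cut = STD_NORMAL.inv_cdf(1.0 - base_rate)        # criterion cutoff
    residual_sd = (1.0 - validity ** 2) ** 0.5
    width = 8.0 / steps  # integrate 8 standard deviations past the cutoff
    selected_mass = 0.0
    successful_mass = 0.0
    for i in range(steps + 1):
        x = x_cut + i * width
        weight = 0.5 if i in (0, steps) else 1.0  # trapezoidal rule
        density = STD_NORMAL.pdf(x) * weight
        # Probability of exceeding the criterion cutoff given predictor score x.
        p_success = 1.0 - STD_NORMAL.cdf((y_cut - validity * x) / residual_sd)
        selected_mass += density
        successful_mass += density * p_success
    return successful_mass / selected_mass

rate = success_rate_among_selected(validity=0.35, selection_ratio=0.05, base_rate=0.60)
print(f"Estimated success rate among selected candidates: {rate:.0%}")
```

With a validity of zero the function returns the base rate itself, which is a quick sanity check on the model.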

Variation in Productivity

Brogden[4] demonstrated that the improvement in employee performance is directly proportional to the validity of the assessment technique, not by focusing on the percentage of people whose performance exceeds a minimum, but by examining the productivity of the selected employees. For example, an assessment technique whose validity is 0.5 delivers 50% of the improvement that a perfect predictor would deliver. The formula developed by Brogden expresses the gain in utility per selected employee as the product of three terms, $\Delta U = \Delta r \times SD \times Z$, where:

- Δr = Difference in validity, measured by the predictor/criterion correlation coefficients, between the two assessment techniques being compared. If the reference technique is random selection, whose validity (and correlation coefficient) is zero, then the gain in utility is directly proportional to the validity.

- SD = Standard deviation of staff productivity. Productivity can be measured in different ways, but two methods are typically used: either by measuring the production in monetary value or by measuring the production as a percentage of the average value being produced.

- Z = Average score of selected applicants, in standard scores for the predictor, compared to all applicants. When all applicants are selected, Z=0. The lower the selection ratio, the higher the value of Z.
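
Reading the three terms above as a product (Δr × SD × Z), the gain per selected employee can be sketched as follows. The sketch assumes normally distributed predictor scores and strict top-down selection, so that Z can be derived from the selection ratio; the function names and the example figures are illustrative.

```python
from statistics import NormalDist

def mean_standard_score(selection_ratio: float) -> float:
    """Average predictor z-score of the selected applicants.

    Assumes normal predictor scores and top-down selection, in which case
    Z equals the normal density at the cutoff divided by the selection ratio.
    """
    std_normal = NormalDist()
    cutoff = std_normal.inv_cdf(1.0 - selection_ratio)
    return std_normal.pdf(cutoff) / selection_ratio

def utility_gain(delta_r: float, sd_productivity: float, selection_ratio: float) -> float:
    """Gain per selected employee: delta_r * SD * Z."""
    return delta_r * sd_productivity * mean_standard_score(selection_ratio)

# Illustrative case: validity 0.3 versus random selection (validity 0),
# SD of productivity of $32,000, and 10% of applicants selected.
gain = utility_gain(delta_r=0.3, sd_productivity=32_000, selection_ratio=0.10)
print(f"Estimated yearly gain per hire: ${gain:,.0f}")
```

Lowering the selection ratio raises Z and therefore the gain, which is why the same technique is worth more when many applicants compete for few positions.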

Other tables were proposed by Naylor & Shine[5], taking into account the variance of the performance measured by the ratio between the best and the worst employees' production. Variants of Brogden's formula exist that allow other parameters to be included, such as employment duration at the company[6] or even the cost of the assessment technique being used.

Ghiselli and Brown simplified the use of utility tables by presenting them in the form of diagrams. Brogden's work was taken up by Schmidt et al.[7], who calculated the financial gains in recruitments for public administrations. The research undertaken by this team included criteria other than economic criteria in hiring decision models, such as people's preferences, values, personality, organizational goals, social values, etc. Considering productivity as being measured by output in monetary value, research shows that the Standard Deviation of Productivity (SDP) is at least 40% of the average salary in the job[8].

For example, if the average annual salary for a given position is $80,000, then the value of SDP is at least $32,000. If performance follows a normal distribution, the person at the 84th percentile produces $32,000 more than someone at the 50th percentile. Between a person one standard deviation below the average (16th percentile) and a person one standard deviation above (84th percentile), the annual difference is $64,000.
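
The salary example can be reproduced with a short calculation. This sketch takes the 40% figure as a lower bound, as stated above, and assumes normally distributed output; the helper names are illustrative.

```python
from statistics import NormalDist

def sdp_from_salary(average_salary: float, sdp_share: float = 0.40) -> float:
    """Lower-bound estimate of the standard deviation of productivity."""
    return average_salary * sdp_share

def output_gap_vs_median(sdp: float, percentile: float) -> float:
    """Yearly output difference between a given percentile and the median,
    assuming output is normally distributed."""
    return sdp * NormalDist().inv_cdf(percentile)

sdp = sdp_from_salary(80_000)
# The 84th and 16th percentiles sit roughly one standard deviation
# above and below the median (more precisely, 84.13% and 15.87%).
above = output_gap_vs_median(sdp, 0.8413)
below = output_gap_vs_median(sdp, 0.1587)
print(f"SDP: ${sdp:,.0f}")
print(f"84th vs 50th percentile: ${above:,.0f}")
print(f"84th vs 16th percentile: ${above - below:,.0f}")
```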

Considering productivity as a percentage of the average value produced, studies show that SDP varies with the level of the position. In these calculations, the value produced by each person is divided by the average value produced by all people at the same level, then multiplied by 100. For unskilled positions, the SDP is 19%; for skilled positions, it’s 32%; and for managerial and professional roles, it’s 48%[9]. These numbers are averages from all available studies measuring the value produced across different jobs.

Utility and False Rejections

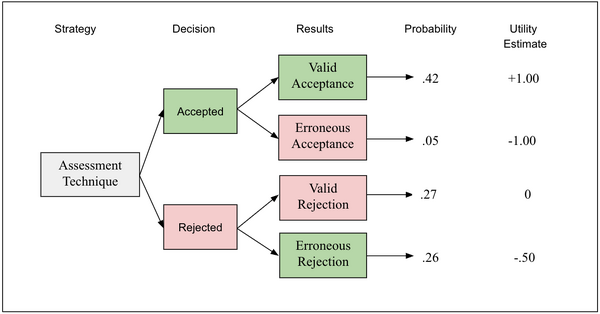

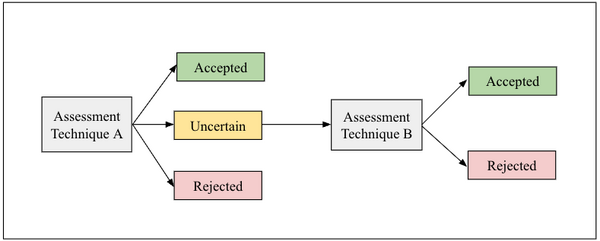

The diagram illustrates a strategic decision in which a single assessment technique is administered to a group of applicants, whose applications are accepted or rejected based on the assessment technique’s results. The objective is to maximize the expected utility across all possible outcomes. This simple way of measuring the utility of an assessment technique was developed by Boudreau[10], building on the previously mentioned work of Schmidt and Hunter.

In this example, the cost of the assessment technique is estimated at 0.10 on the utility scale. The overall utility of the assessment technique is calculated by multiplying the probability of each outcome by its utility, then adding these products for the four outcomes and subtracting the cost of the assessment technique. In the case illustrated, the expected utility is EU = (.42)(1.00) + (.05)(-1.00) + (.27)(0) + (.26)(-.50) - .10 = +.14. This model suggests that an assessment technique that can only provide low predictive validity, which is generally the case in selection, gains nothing by being longer and more expensive to administer. In reality, the selection process happens in several steps, as in the diagram below, where two techniques, such as biodata, an interview, or a personality assessment, are used one after the other.
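
The expected-utility equation above can be written out as a short calculation. The probabilities, utilities, and the cost of 0.10 are taken from that equation; the outcome labels are an assumption about which term corresponds to which decision outcome, and the function name is illustrative.

```python
# (label, probability, utility) for each of the four outcomes.
# Labels are assumed; the numbers come from the equation in the text.
outcomes = [
    ("accepted and successful", 0.42, 1.00),
    ("accepted and unsuccessful", 0.05, -1.00),
    ("rejected, would have failed", 0.27, 0.00),
    ("rejected, would have succeeded", 0.26, -0.50),
]

def expected_utility(outcomes, cost):
    """Sum of probability * utility over all outcomes, minus the testing cost."""
    return sum(probability * utility for _, probability, utility in outcomes) - cost

eu = expected_utility(outcomes, cost=0.10)
print(f"Expected utility: {eu:+.2f}")
```

Raising the cost while leaving the probabilities unchanged lowers the result one for one, which is the point made above about longer and more expensive techniques.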

In mass recruitments, when several assessment techniques are used and their relationships with the criterion are known or can be estimated, their results can be combined using multiple regression equations. Various techniques have been developed to account for correlations between multiple assessment techniques and to preserve the predictive power of the equation from one sample to another, based on error probabilities[11].
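
For the simplest case of two assessment techniques, the standardized regression weights and the combined validity follow directly from the three correlations involved. The sketch below uses the textbook two-predictor formulas; the correlation values are hypothetical and chosen only for illustration.

```python
import math

def two_predictor_weights(r1y: float, r2y: float, r12: float):
    """Standardized regression weights and multiple R for two predictors.

    r1y, r2y -- validity of each technique against the criterion
    r12      -- correlation between the two techniques
    """
    denom = 1.0 - r12 ** 2
    beta1 = (r1y - r2y * r12) / denom
    beta2 = (r2y - r1y * r12) / denom
    multiple_r = math.sqrt(beta1 * r1y + beta2 * r2y)
    return beta1, beta2, multiple_r

# Hypothetical values: validities of 0.30 and 0.20, intercorrelation of 0.10.
beta1, beta2, multiple_r = two_predictor_weights(0.30, 0.20, 0.10)
print(f"weights: {beta1:.3f}, {beta2:.3f}; combined validity: {multiple_r:.3f}")
```

The more the two techniques correlate with each other, the less the second one adds to the combined validity, which is why correlations between techniques have to be accounted for.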

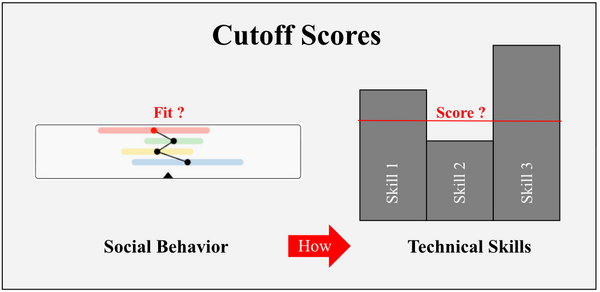

Cutoff Scores

Using multiple cutoff scores helps prevent diluting important and highly rated characteristics with weaker, less relevant ones. For example, a significant deficiency in one skill may go unnoticed if the individual performs well in other skills. If the deficiency is in a skill critical for a specific role, the person is at higher risk of failure. This can be avoided by identifying the essential skills for a position and applying the multiple cutoff method to those skills. For most assessment techniques, however, it is better to use raw scores in regressions rather than multiple cutoff scores[12].
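
The difference between a compensatory composite and the multiple cutoff method can be illustrated with a small example. The skills, scores, weights, and thresholds below are hypothetical, as are the function names.

```python
def composite_score(scores: dict, weights: dict) -> float:
    """Weighted average of skill scores: strong skills can offset weak ones."""
    total_weight = sum(weights.values())
    return sum(scores[skill] * w for skill, w in weights.items()) / total_weight

def passes_multiple_cutoffs(scores: dict, cutoffs: dict) -> bool:
    """True only if every essential skill meets its minimum score."""
    return all(scores[skill] >= minimum for skill, minimum in cutoffs.items())

# Hypothetical candidate with one marked deficiency.
candidate = {"numeracy": 35, "writing": 90, "planning": 88}
weights = {"numeracy": 1, "writing": 1, "planning": 1}
cutoffs = {"numeracy": 50}  # numeracy assumed to be critical for the role

print(f"Composite score: {composite_score(candidate, weights):.1f}")
print(f"Passes essential-skill cutoffs: {passes_multiple_cutoffs(candidate, cutoffs)}")
```

Here the composite looks acceptable while the cutoff check flags the deficiency in the essential skill, which is the situation the multiple cutoff method is meant to catch.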

When it comes to the social behaviors, rather than the technical skills, expected in the job, as measured with GRI’s adaptive profiles, cutoff scores apply similarly by considering the percent-fit with the job’s profile and admissible scores, factor by factor. Selection decisions are made based on a candidate’s ability to adapt, alongside the technical skills they may have. The adaptive profile indicates how the skills are expressed and developed, inviting a selection decision based not just on the already acquired skills, but on the person's and the company's growth capabilities as well.

One-person Selection

When the selection process is for a single individual rather than many, as with CEOs and other executives, the candidate evaluation involves multiple assessors and various techniques. Several factors influence the process. Utility equations used in mass recruitment have no applicability in this case. Ultimately, the assessors’ analysis and regressions based on multiple characteristics become the most effective decision-making process[13].

Particularly for a one-person selection, the validity of a technique must not be confused with the validity of the selection decision, which relies on the combination of characteristics being assessed. A selection decision is based on an inference made about a future event[14]. It is critical to consider the criteria by which the person’s performance will be assessed. Focusing on how the person performs in the job, rather than or in addition to what is expected to be delivered, invites considering the multiple variables at play and finding creative solutions to correct individual actions and the outcomes that follow.

Moderating Variables

Importantly, utility calculations in selection must be moderated by factors such as the candidate's motivation and interest. When candidates are genuinely interested in and highly motivated by a job, the correlations between aptitude tests, for example, and job performance tend to be strong[15]. When they are not motivated to complete an assessment, it is unreasonable to expect good results from them.

The utility of an assessment technique in selection depends not only on its intrinsic characteristics but also on the environment that enables candidates to develop and utilize those characteristics.

In automated selection processes involving many candidates, the idea of a moderating variable generally has limited usefulness until more advanced statistical methods and frameworks can account for its effects[16]. Management, which has repeatedly been shown to be a major moderator of individual performance, must be part of the process.

Notes

- [1] Wald, A. (1950). Statistical decision functions. New York: Wiley.

- [2] Cronbach, L. J., & Gleser, G. C. (1965). Psychological tests and personnel decisions (2nd ed.). Champaign: University of Illinois Press.

- [3] Taylor, H. C., & Russell, J. T. (1939). The relationship of validity coefficients to the practical effectiveness of tests in selection: Discussion and tables. Journal of Applied Psychology, 23, 565-578.

- [4] Brogden, H. E. (1946). On the interpretation of the correlation coefficient as a measure of predictive efficiency. Journal of Educational Psychology, 37, 65-76.

- [5] Naylor, J. C., & Shine, L. C. (1965). A table for determining the increase in mean criterion score obtained by using a selection device. Journal of Industrial Psychology, 3, 33-42.

- [6] Salgado, J. (1999). Personnel selection methods. International Review of Industrial and Organizational Psychology, 14, 1-54.

- [7] Schmidt, F. L., Hunter, J. E., McKenzie, R. C., & Muldrow, T. W. (1979). Impact of valid selection procedures on workforce productivity. Journal of Applied Psychology, 64, 609-626.

- [8] Schmidt, F. L., & Hunter, J. E. (1983). Individual differences in productivity: An empirical test of estimates derived from studies of selection procedure utility. Journal of Applied Psychology, 68, 407-415. Schmidt, F. L., Mack, M. J., & Hunter, J. E. (1984). Selection utility in the occupation of U.S. Park Ranger for three modes of test use. Journal of Applied Psychology, 69, 490-497.

- [9] Hunter, J. E., Schmidt, F. L., & Judiesch, M. K. (1990). Individual differences in output variability as a function of job complexity. Journal of Applied Psychology, 75, 28-42.

- [10] Boudreau, J. W. (1991). Utility analysis for decisions in human resource management. In M. D. Dunnette & L. M. Hough (Eds.), Handbook of industrial and organizational psychology (2nd ed., Vol. 2, pp. 621-745). Palo Alto, CA: Consulting Psychologists Press.

- [11] Dunnette, M. D., & Borman, W. C. (1979). Personnel selection and classification systems. Annual Review of Psychology, 30, 477-525.

- [12] Coward, W. M., & Sackett, P. R. (1990). Linearity of ability-performance relationships: A reconfirmation. Journal of Applied Psychology, 75, 297-300.

- [13] Goldberg, L. R. (1991). Human mind versus regression equation: Five contrasts. In D. Cicchetti & W. M. Grove (Eds.), Thinking clearly about psychology. University of Minnesota Press.

- [14] Hogan, J., & Hogan, R. (1998). Theoretical frameworks for assessment. In R. Jeanneret & R. Silzer (Eds.), Individual psychological assessment (chapter 2). San Francisco: Jossey-Bass.

- [15] Ghiselli, E. E. (1960). The prediction of predictability. Educational and Psychological Measurement, 20, 3-8. Ghiselli, E. E. (1968). Interaction of traits and motivational factors in the determination of the success of managers. Journal of Applied Psychology, 52, 480-483.

- [16] Morris, J. H., Sherman, J. D., & Mansfield, E. R. (1986). Failures to detect moderating effects with ordinary least squares-moderated multiple regression: Some reasons and a remedy. Psychological Bulletin, 99, 282-288.